“What do you see right now?” a man asks a humanoid robot standing behind a table. “I see a red apple on a plate in the centre of the table, a drying rack with cups and a plate, and you standing nearby, with your hand on the table,” the robot replies. “Great, can I have something to eat?” says the man. “Sure thing,” says the robot. It flexes its arms, picks up the apple with its right hand, passes it to its left hand, and then hands it to the man. He asks the robot to explain its actions. “So I gave you the apple, because it’s the only, er, edible item I could provide you with from the table,” it replies.

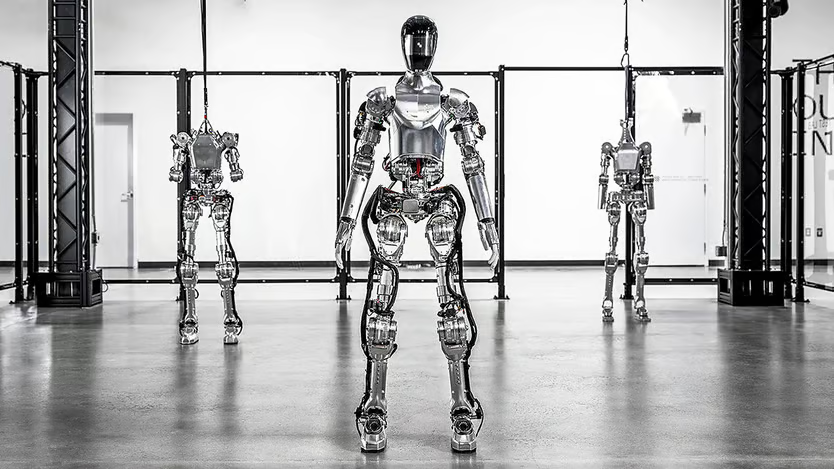

This demonstration, shown in a video released by Figure, a robotics startup, in March, caused widespread amazement. It shows a robot that can hold spoken conversations, recognise and manipulate objects, solve problems and explain its actions. And Figure is not alone in producing such impressive results. After years of slow progress, robots suddenly seem to be getting a lot cleverer. What changed?

The magic ingredient is artificial intelligence (AI). Academic researchers, startups and tech giants are taking advances in AI, such as large language models (LLMs), speech synthesis and image recognition, and applying them to robotics. LLMs are known for powering chatbots like ChatGPT, but it turns out that they can help power real robots, too. “The algorithms can transfer,” says Peter Chen, chief executive of Covariant, a startup based in Emeryville, California. “That is powering this renaissance of robotics.”

The robot in Figure’s video had its speech-recognition and spookily lifelike speech-synthesis capabilities provided by OpenAI, which is an investor in the company. OpenAI shut down its own robotics unit in around 2020, preferring instead to invest in Figure and other startups. But now OpenAI has had second thoughts, and in the past month it has started building a new robotics team, a sign of how sentiment has begun to shift.

A key step towards applying AI to robots was the development of “multimodal” models: AI models trained on different kinds of data. For example, whereas a language model is trained using lots of text, “vision-language models” are also trained using images (still or moving) paired with their corresponding textual descriptions. Such models learn the relationship between the two, allowing them to answer questions about what is happening in a photo or video, or to generate new images based on text prompts.

Wham, bam, thank you VLAM

The new models being used in robotics take this idea one step further. These “vision-language-action models” (VLAMs) take in text and images, plus data relating to the robot’s presence in the physical world, including the readings on internal sensors, the degree of rotation of different joints and the positions of actuators (such as grippers, or the fingers of a robot’s hands). The resulting models can then answer questions about a scene, such as “can you see an apple?” But they can also predict how a robot arm needs to move to pick that apple up, as well as how this will affect what the world looks like.

In other words, a VLAM can act as a “brain” for robots with all sorts of bodies, whether giant stationary arms in factories or warehouses, or mobile robots with legs or wheels. And unlike LLMs, which manipulate only text, VLAMs must fit together several independent representations of the world, in text, images and sensor readings. Grounding the model’s perception in the real world in this way greatly reduces hallucinations (the tendency for AI models to make things up and get things wrong).
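To make the inputs and outputs described above concrete, here is a minimal sketch in Python of what a single step of such a model might consume and produce. Every name in it (`Observation`, `Action`, `stub_policy`) is an illustrative assumption rather than any company's interface, and the "policy" is a placeholder that returns a do-nothing action, not a trained model.

```python
from dataclasses import dataclass
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # one RGB pixel


@dataclass
class Observation:
    """Hypothetical bundle of the three kinds of input the article lists."""
    instruction: str            # text, e.g. "pick up the apple"
    image: List[List[Pixel]]    # camera frame as rows of RGB pixels
    joint_angles: List[float]   # degree of rotation of each joint
    gripper_opening: float      # actuator position: 0.0 closed, 1.0 open


@dataclass
class Action:
    """Hypothetical output: how the arm should move next."""
    joint_deltas: List[float]   # change to apply to each joint angle
    gripper_opening: float      # commanded actuator position


def stub_policy(obs: Observation) -> Action:
    """Stand-in for a trained model: returns a no-op action of the right shape."""
    return Action(
        joint_deltas=[0.0] * len(obs.joint_angles),
        gripper_opening=obs.gripper_opening,
    )


obs = Observation(
    instruction="pick up the apple",
    image=[[(255, 0, 0)]],          # a single red pixel stands in for a frame
    joint_angles=[0.1, 0.2, 0.3],
    gripper_opening=1.0,
)
action = stub_policy(obs)
```

The point of the sketch is only the shape of the data: text, pixels and sensor readings go in together, and a movement command comes out.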

Dr Chen’s company, Covariant, has created a model called RFM-1, trained using text, images, and data from more than 30 types of robots. Its software is primarily used in conjunction with “pick and place” robots in warehouses and distribution centres located in suburban areas where land is cheap, but labour is scarce. Covariant does not make any of the hardware itself; instead its software is used to give existing robots a brain upgrade. “We can expect the intelligence of robots to improve at the speed of software, because we have opened up so much more data the robot can learn from,” says Dr Chen.

Using these new models to control robots has several advantages over previous approaches, says Marc Tuscher, co-founder of Sereact, a robotics startup based in Stuttgart. One benefit is “zero-shot” learning, which is tech-speak for the ability to do a new thing (such as “pick up the yellow fruit”) without being explicitly trained to do so. The multimodal nature of VLAMs grants robots an unprecedented degree of common sense and knowledge about the world, such as the fact that bananas are yellow and a kind of fruit.

Bot chat

Another benefit is “in-context learning”: the ability to change a robot’s behaviour using text prompts, rather than elaborate reprogramming. Dr Tuscher gives the example of a warehouse robot programmed to sort parcels, which was getting confused when open boxes were wrongly being placed into the system. Getting it to ignore them would once have required retraining the model. “These days we give it a prompt (ignore open boxes) and it just picks the closed ones,” says Dr Tuscher. “We can change the behaviour of our robot by giving it a prompt, which is crazy.” Robots can, in effect, be programmed by non-specialist human supervisors using ordinary language, rather than computer code.

Such models can also respond in kind. “When the robot makes a mistake, you can query the robot, and it answers in text form,” says Dr Chen. This is useful for debugging, because new instructions can then be supplied by modifying the robot’s prompt, says Dr Tuscher. “You can tell it, ‘this is bad, please do it differently in future.’” Again, this makes robots easier for non-specialists to work with.
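The practical difference can be sketched in a few lines of Python. In the hypothetical example below, the operator's rules are ordinary strings that are folded into the text sent to the model on each request; nothing is retrained. The function name `build_prompt` and the prompt layout are assumptions made for illustration, and no real model is called.

```python
from typing import List


def build_prompt(task: str, operator_rules: List[str]) -> str:
    """Assemble the text handed to the model: the task plus any operator rules."""
    lines = [f"Task: {task}"]
    if operator_rules:
        lines.append("Rules:")
        lines.extend(f"- {rule}" for rule in operator_rules)
    return "\n".join(lines)


rules: List[str] = []
before = build_prompt("sort parcels", rules)

# A supervisor notices open boxes confusing the robot and adds one sentence.
rules.append("ignore open boxes")
after = build_prompt("sort parcels", rules)

print(after)
```

Feedback of the “please do it differently in future” kind would be handled the same way in this sketch: as one more line appended to `rules`.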

Being able to ask a robot what it is doing, and why, is particularly helpful in the field of self-driving cars, which are really just another form of robot. Wayve, an autonomous-vehicle startup based in London, has created a VLAM called Lingo-2. As well as controlling the car, the model can understand text commands and explain the reasoning behind any of its decisions. “It can provide explanations while we drive, and it allows us to debug, to give the system instructions, or modify its behaviour to drive in a certain style,” says Alex Kendall, Wayve’s co-founder. He gives the example of asking the model what the speed limit is, and what environmental cues (such as signs and road markings) it has used to arrive at its answer. “We can check what kind of context it can understand, and what it can see,” he says.

As with other forms of AI, access to large amounts of training data is crucial. Covariant, which was founded in 2017, has been gathering data from its existing deployments for many years, which it used to train RFM-1. Robots can also be guided manually to perform a particular task a few times, with the model then able to generalise from the resulting data. This process is known as “imitation learning”. Dr Tuscher says he uses a video-game controller for this, which can be fiddly.
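In its simplest form, imitation learning amounts to fitting a function to recorded pairs of “what the robot sensed” and “what the human operator did”. The Python sketch below is a toy version under loud assumptions: the state is a single number (say, distance to the object), the action is a single number (say, how far to move), the demonstration values are invented, and the “model” is a straight line fitted by least squares rather than a neural network.

```python
from typing import Callable, List, Tuple

Demo = Tuple[float, float]  # (state observed, action the operator took)


def fit_linear_policy(demos: List[Demo]) -> Callable[[float], float]:
    """Fit action = slope * state + intercept to the demonstrations."""
    n = len(demos)
    mean_s = sum(s for s, _ in demos) / n
    mean_a = sum(a for _, a in demos) / n
    cov = sum((s - mean_s) * (a - mean_a) for s, a in demos)
    var = sum((s - mean_s) ** 2 for s, _ in demos)
    slope = cov / var
    intercept = mean_a - slope * mean_s
    return lambda state: slope * state + intercept


# Invented demonstrations: the operator always moves half the remaining distance.
demos = [(0.0, 0.0), (1.0, 0.5), (2.0, 1.0), (4.0, 2.0)]
policy = fit_linear_policy(demos)

# The fitted policy is asked about a state that never appeared in the data.
predicted = policy(3.0)
```

Generalisation here means only that the fitted line gives a sensible answer for a state between the demonstrated ones; real systems learn far richer mappings from images and joint readings.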

But that is not the only option. An ingenious research project at Stanford University, called Mobile ALOHA, generated data to teach a robot basic domestic tasks, like making coffee, using a process known as whole-body teleoperation (in short, puppetry). The researchers stood behind the robot and moved its limbs directly, enabling it to sense, learn and then replicate a particular set of actions. This approach, they claim, “allows people to teach arbitrary skills to robots”.

Investors are piling in. Chelsea Finn, a professor at Stanford who oversaw the Mobile ALOHA project, is also one of the co-founders of Physical Intelligence, a startup which recently raised $70m from backers including OpenAI. Skild, a robotics startup spun out of Carnegie Mellon University, is thought to have raised $300m in April. Figure, which is focusing on humanoid robots, raised $675m in February; Wayve raised $1.05bn in May, the largest-ever funding round for a European AI startup.

Dr Kendall of Wayve says the growing interest in robots reflects the rise of “embodied AI”, as progress in AI software is increasingly applied to hardware that interacts with the real world. “There’s so much more to AI than chatbots,” he says. “In a couple of decades, this is what people will think of when they think of AI: physical machines in our world.”

As software for robotics improves, hardware is now becoming the limiting factor, researchers say, particularly when it comes to humanoid robots. But when it comes to robot brains, says Dr Chen, “We are making progress on the intelligence very quickly.”

Copyright : Economist.